Experts reveal new cybercrime scheme as scammers impersonate a mayor

By

Seia Ibanez

In today’s world of advanced technology, it's no surprise that the tools we use for convenience and connection can also be exploited for nefarious purposes.

Imagine receiving a video call from a friend, family member, or a public figure you trust, only to find out later that you were speaking to an AI impersonator.

This is exactly what happened in the case of Sunshine Coast Mayor Rosanna Natoli, whose image was used without her consent in a ‘distressing and concerning’ incident.

Mayor Natoli recounted the incident, saying, ‘A friend of mine sent me a text saying, “This might sound strange, but did we just talk on Skype?”’

‘And my response was, “No, we did not,” and I picked up the phone immediately.’

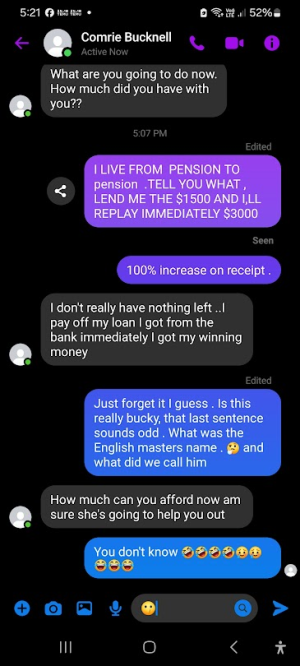

Mayor Natoli's name and image were being used on Facebook to create fake accounts that mirror her real posts.

‘The fake accounts look like my accounts because they are taking my posts and reposting,’ she said.

These accounts have amassed followers and have even gone as far as to solicit bank account details from unsuspecting victims.

‘Just recently, via Messenger, these people have been asking for bank account details,’ she said.

‘At first, I just thought, “Oh, that's a bit annoying,” but as it increased in its intensity and sophistication, that's when we brought it to the attention of the experts.’

Queensland Police Acting Superintendent Chris Toohey has noted that AI-related crimes have surged in recent years.

‘Cybercrime is an unfortunate consequence of us getting the benefits of making life easier with technology,’ he said.

‘It brings the fraudulent offenders into that realm, and they can operate well.’

‘It's about the trickery and the coercion through that almost grooming process, where they're starting that back and forth to gain the trust of people.’

Dr Declan Humphreys, a cybercrime expert from the University of the Sunshine Coast, explained that live facial-altering technology is so convincing that it requires a very discerning eye to spot fakes.

‘The AI can recognise the scammer's face; it then also has an overlay of the face that scammers are trying to impersonate,’ Dr Humphreys said.

‘Then it can manipulate that new face to match what the scammers are doing.’

‘It's using AI technology in real time to adapt and change their face to the face of the person who they're impersonating.’

Dr Humphreys added that the potential for scams is only ‘just beginning’.

‘We're seeing it particularly in the realm of romance scams, where traditionally people would use messaging or even phone calls to scam people out of money,’ he said.

These scams once relied on messaging or phone calls, but AI now enables scammers to create highly realistic video impersonations.

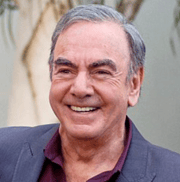

Celebrities and politicians like Mayor Natoli are prime targets due to the abundance of data available about them online.

However, even individuals with a small digital footprint are not immune.

‘The speed that AI is developing also means that these types of scams are developing really very quickly,’ Dr Humphreys said.

One alarming scam is the ‘Hi Mum’ scam, which originally involved criminals sending text messages to victims posing as their children in distress and urgently needing financial help.

However, National Australia Bank (NAB) warned earlier this year that this scam has evolved and become more sophisticated and damaging.

‘Parents, for example, have gotten calls of scammers impersonating their sons or their daughters,’ Dr Humphreys added.

‘They've used snippets from their voice, used AI then to develop it into a realistic-sounding voice that parents even believe is their child.’

‘It's really possible for AI technology to take that snippet of what you're saying at your child's birthday party or amongst friends, then change it, track it and then make it into an AI program.’

Acting Superintendent Toohey urged anyone encountering suspicious accounts asking for money or personal details to cut contact immediately and report the incident to the police.

‘We have got an online set-up for them to go and make a complaint and we will then assess it straight away,’ he said.

‘Any information they can provide on a contact they've had, screenshots, any communication they can forward to us.’

While scammers are becoming more difficult to identify, they often share one common trait: they pressure victims to make quick decisions.

‘So, put the phone down, step away for five or 10 minutes, talk to somebody else, ask them, “Does this sound real?”’ Dr Humphreys advised.

‘Then that distance can actually give you that clarity to think, “Oh, maybe this isn't exactly what I think it is.”’

Have you or someone you know encountered a similar scam? Share your experiences and tips for staying safe in the digital world in the comments below.

Tip

If you suspect you've been targeted by a scam, contact your bank immediately and report the incident to Scamwatch. You may also read through our own Scam Watch forum to stay updated on the latest scams.

Key Takeaways

- Sunshine Coast Mayor Rosanna Natoli reported a sophisticated new cybercrime where artificial intelligence was used to impersonate her on live video calls.

- Cybercrime experts warned that live facial-altering technology is highly convincing and requires a discerning eye to spot fakes.

- Authorities are investing significant efforts into investigating these difficult-to-trace crimes, as scammers can operate from virtually anywhere in the world.

- Experts advised that a common tactic of scammers is to pressure victims into making quick decisions; consulting with others can provide clarity and prevent falling victim to such scams.